
A notable advantage of data science over mainstream statistical practice is that it can extract insights and build accurate predictions from large volumes of noisy, seemingly irrelevant data. That is how sports analysts and team selectors in cricket and football can identify the most relevant KPIs and build the team best suited to face a given rival.

To build such intelligent models, data scientists are trained and employed to develop machine learning solutions. This blog post walks through the crucial steps that the data science project manager and all stakeholders need to follow when dealing with complex data science projects.

Defining Data Science

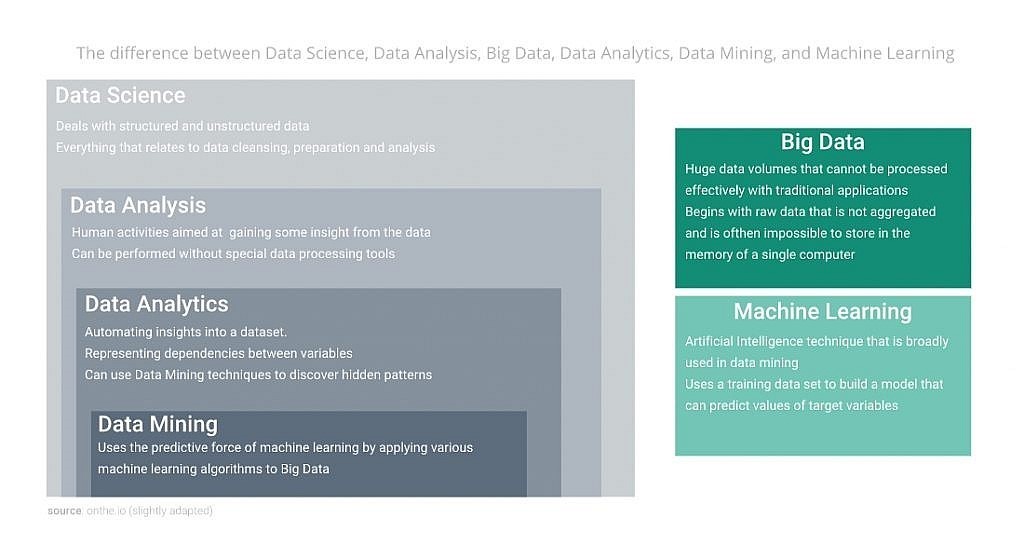

To avoid confusion or misconceptions, it is essential to be clear about what data science and its related methodologies are. Data science is difficult to define in absolute terms. If you ask different data science experts for a definition, you will get answers that emphasize different aspects and viewpoints.

A more straightforward definition is that data science is a combination of several fields of mathematics and computer science, specifically statistics and programming, that deals with extracting insights and hidden patterns from large datasets. Since businesses depend on data more and more, grasping the concept of data science and its related methodologies is the first step toward solving most data-related problems.

Data science and its related concepts

Although data science is a technical discipline, managing a data science project is not a purely technical routine. It calls for a combination of hard and soft skills. Like any other project, a data science project needs a roadmap to guide you through the problem-solving process. The following steps can help you complete the project successfully while saving valuable resources, time, and money.

Data Science Project Management

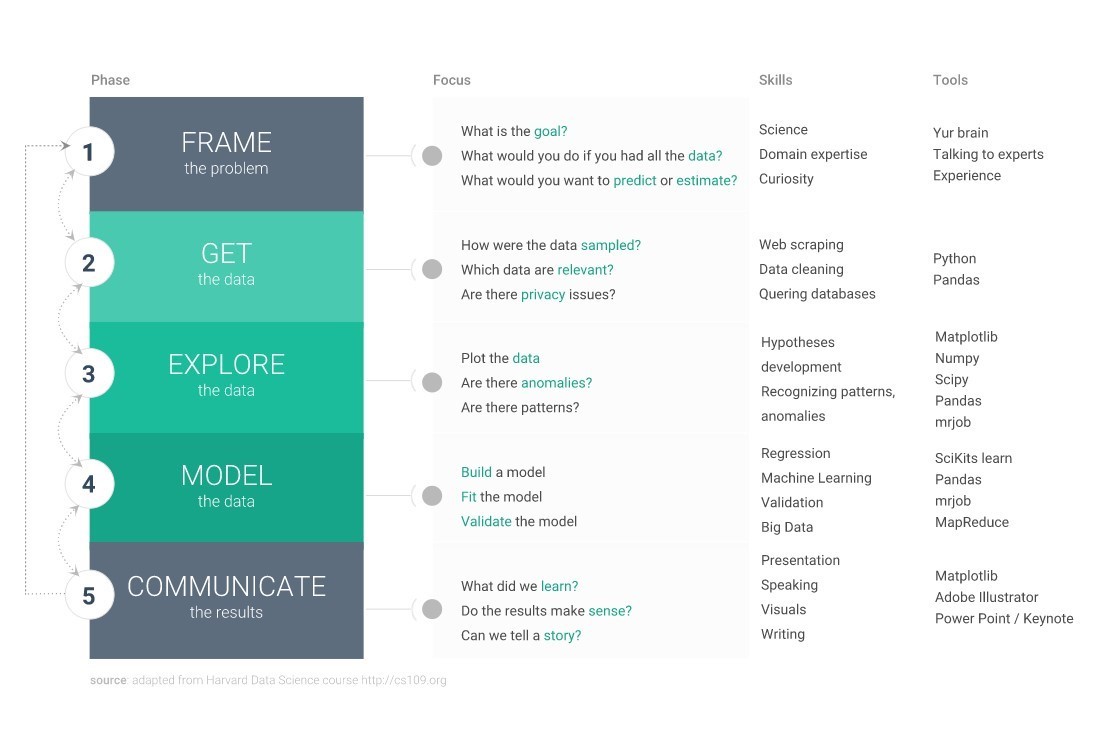

A data science project follows a lifecycle that helps ensure the timely delivery of data science products or services. Like the Agile and Scrum methodologies, it is a non-linear, iterative process, full of critical questions, updates, and revisions driven by further research and by a deeper understanding of the data as more information becomes available.

Let’s take a closer look at how these steps allow you to handle your data science project efficiently.

Step 1: Frame the problem

Every data science project should begin with an understanding of the business workflows and their related challenges. At this stage, all the stakeholders on board play a vital role in defining the problems and objectives. Even seemingly negligible aspects of the business model should be taken into consideration to avoid trouble later. The fate of your project is largely determined here, which makes this step one of the toughest and most crucial. When carried out correctly, it can save you a lot of energy, cost, and time.

Ask business owners what they are trying to achieve. As an analyst, the biggest challenge you will face here is translating vague requirements into a well-explained, crystal-clear problem statement. The technical gap between stakeholders and experts can introduce many communication problems into the process. Input from the business owner is essential, but in many cases it is not substantial enough, or the owner may not convey their thoughts properly. A deeper understanding of the business problem, combined with technical expertise, can help you close this gap. Don’t hesitate to learn about the sponsors’ domain from them if necessary.

A clear and concise storyline should be the primary outcome at this stage.

Since the framework is iterative, you will return to this stage from later ones. Try to build a firm foundation for your project that everyone can understand and agree on.

Step 2: Get the data

Now you can shift your focus to data gathering. You will find structured, semi-structured, and unstructured data, all of which can be useful in different ways. Multiple data sources can cause confusion, but they also leave room for creativity and alternatives. Potential data sources, including websites, social media, open data, and enterprise data, can be merged into data pools.

At this point the data has been identified but is not ready to be used. Before using it for its larger purpose, you need to pass it through several stages, from importing and cleaning to labeling and clustering. Cleaning removes as many errors and anomalies as possible and makes sure the data loads correctly. Make sure the data you are using is correct and authentic. Neglecting this crucial aspect can lead to long-term damage.

Importing and preparing the data is typically the most time-consuming exercise, as it can consume around 70 to 90 percent of the overall project time. If all the data sources are well organized, this can fall to 50 percent. You can also save time and effort by automating some of the data preparation processes.
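As one illustration of how part of data preparation can be automated, here is a minimal sketch in Python using only the standard library. The records, the field names (`customer_id`, `age`, `country`), and the validation rules are all hypothetical, made up for this example; a real project would derive them from its own data sources and would likely use a dedicated data library.

```python
from typing import Optional

# Hypothetical raw records merged from several sources.
# All values arrive as strings, as they often do after an import.
raw_records = [
    {"customer_id": "101", "age": "34", "country": "US "},
    {"customer_id": "102", "age": "", "country": "de"},      # missing age
    {"customer_id": "101", "age": "34", "country": "US "},   # duplicate id
    {"customer_id": "103", "age": "-5", "country": "FR"},    # impossible age
    {"customer_id": "104", "age": "41", "country": "in"},
]


def clean_record(record: dict) -> Optional[dict]:
    """Return a normalized record, or None if it fails basic validation."""
    try:
        customer_id = int(record["customer_id"])
        age = int(record["age"])
        country = record["country"]
    except (KeyError, ValueError):
        return None  # a field is missing or not numeric
    if not 0 <= age <= 120:  # assumed plausible range for this example
        return None
    return {
        "customer_id": customer_id,
        "age": age,
        "country": country.strip().upper(),
    }


def clean_dataset(records: list) -> tuple:
    """Drop invalid rows and repeated ids; return (clean_rows, rejected_count)."""
    seen_ids = set()
    clean_rows = []
    rejected = 0
    for record in records:
        cleaned = clean_record(record)
        if cleaned is None or cleaned["customer_id"] in seen_ids:
            rejected += 1
            continue
        seen_ids.add(cleaned["customer_id"])
        clean_rows.append(cleaned)
    return clean_rows, rejected


clean_rows, rejected = clean_dataset(raw_records)
print(f"kept {len(clean_rows)} rows, rejected {rejected}")
```

Counting the rejected rows, rather than dropping them silently, gives you a quick signal of how trustworthy each source is before you invest time in exploration.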

Step 3: Explore the data

Once you are satisfied with the quality of the collected data, you can start exploring it. Examine how well the existing labels, segments, categories, and other groupings are organized. Analyze the links and connections between the various attributes and entities. Visualization tools can save you a lot of time and energy while helping you better understand the structure of the data and uncover essential insights. The first purposeful look at the data often reveals gaps and misunderstandings that were overlooked in previous steps. Don’t hesitate to roll back to the earlier stages, specifically data collection, to close the gaps.

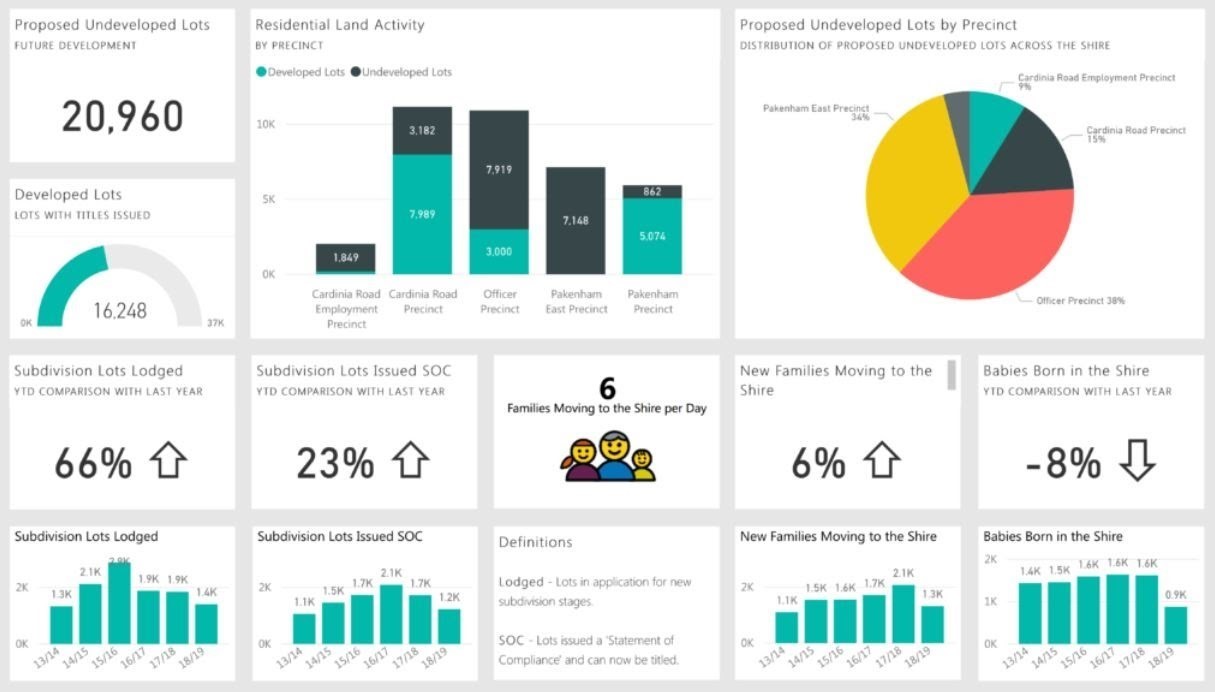

Example of data visualization tool to discover insights
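Before reaching for a visualization tool, a first look at the distributions and relationships in the data can be as simple as the sketch below. The two columns (`ad_spend` and `weekly_sales`) and their values are invented for illustration, and the Pearson correlation coefficient is just one common way to quantify a link between two numeric attributes.

```python
import statistics

# Hypothetical cleaned columns: ad spend and weekly sales for six stores.
ad_spend = [10.0, 20.0, 30.0, 40.0, 50.0, 60.0]
weekly_sales = [25.0, 41.0, 62.0, 79.0, 103.0, 118.0]


def summarize(values):
    """Basic distribution summary for one numeric column."""
    return {
        "min": min(values),
        "max": max(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),  # sample standard deviation
    }


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length columns."""
    mean_x = statistics.mean(xs)
    mean_y = statistics.mean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


print(summarize(ad_spend))
print(summarize(weekly_sales))
print(f"correlation: {pearson(ad_spend, weekly_sales):.3f}")
```

A strong correlation is only a hint worth investigating, not proof of a causal relationship, so it should be checked against the questions raised during problem framing.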

The most troublesome part of this step is testing the ideas and hypotheses that are most likely to turn into insights. Revisit the results of the problem framing step to find essential questions or ideas that can improve your data exploration. As in any other project, the project duration, timelines, and deadlines will limit how often you can roll back. This forces you to consider all the scenarios and aspects carefully before revisiting previous stages. Set your priorities accordingly. Once you start observing clear patterns and insights, you are almost ready to model your data. Rethink your conclusions at every step and filter your perspectives carefully.

Step 4: Model the data

At this critical stage, you perform the actual modeling of the data. Data scientists use a training set to train a model, and then evaluate its predictions and decisions on a test set. The training set is a large portion of the dataset for which the output is known; the test set is data that the model has not seen during training.

This stage is highly iterative. Data scientists adjust the model’s parameters repeatedly to best fit the data. Here, fitting means adjusting the parameters so that the model also performs well on unseen data such as the test set. Once you have developed a model, you need to validate it. This is how you check the accuracy of the model. If your model is either overfit or underfit, you need to rebuild it by changing the parameters and the size of the training set. Overfitting means the model gives accurate results only on the training set; underfitting means the model does not give accurate results even on the training set. The final product should generalize, giving accurate results on both the training set and the test set.

Make sure that your model addresses the business problem by revisiting the first stage. Keep in mind that not every model will be perfect. The key to success is getting the best out of your model in terms of accuracy and objectivity.
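The train, test, and validate loop described in this step can be sketched as follows, using only the Python standard library. The synthetic data, the simple linear model, the `acceptable_error` threshold, and the `max_gap_ratio` rule in `diagnose` are all assumptions chosen for illustration; they are not a standard recipe, and a real project would pick its own model, error metric, and thresholds.

```python
import random


def train_test_split(rows, test_ratio=0.2, seed=42):
    """Shuffle a copy of the rows and split it into (train, test)."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_ratio)
    return shuffled[n_test:], shuffled[:n_test]


def fit_line(rows):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in rows)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in rows)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mean_squared_error(rows, slope, intercept):
    """Average squared difference between predictions and known outputs."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in rows) / len(rows)


def diagnose(train_error, test_error, acceptable_error, max_gap_ratio=2.0):
    """Rough label for the fit; the thresholds are illustrative assumptions."""
    if train_error > acceptable_error:
        return "underfit"  # inaccurate even on the training set
    if test_error > max(acceptable_error, max_gap_ratio * train_error):
        return "overfit"  # accurate on the training set only
    return "generalizes"


# Synthetic dataset with known outputs: y is roughly 2x + 1 plus noise.
noise = random.Random(0)
rows = [(float(x), 2.0 * x + 1.0 + noise.gauss(0.0, 0.5)) for x in range(50)]

train_rows, test_rows = train_test_split(rows)
slope, intercept = fit_line(train_rows)
train_mse = mean_squared_error(train_rows, slope, intercept)
test_mse = mean_squared_error(test_rows, slope, intercept)

print(f"slope={slope:.2f}, intercept={intercept:.2f}")
print(f"train MSE={train_mse:.3f}, test MSE={test_mse:.3f}")
print(diagnose(train_mse, test_mse, acceptable_error=1.0))
```

Comparing the error on the training set with the error on the held-out test set is what separates the three cases: high error on both suggests underfitting, low training error with much higher test error suggests overfitting, and similar low errors on both suggest the model generalizes.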

Step 5: Communicate the results

Last but not least, communicating the results means presenting your model to the business owner or sponsor, who most likely does not know how it works or what to do with it. The clearer and more precise the presentation, the easier it is to explain the project. Refrain from going into too many technical details; the client may not understand them or may not be interested. Document all your complex calculations and use plain wording to explain everything.

Delivery of the model is not necessarily the last step in a data science project. That depends on the agreement between you and the client. Sometimes, you and your development team will hand the model over to those who will deploy it in the environment where it is intended to run. If you are developing a predictive model for, say, an e-commerce platform, you may need to stay involved after deployment, extracting insights and making predictions based on real-time customer data.

If that is not part of your agreement, you must ensure that all your work and calculations are documented so that they read like a user’s manual. Archive all of the methodologies that you have implemented. This includes evolution diagrams, graphs, and other visualizations of the data at every step, from the raw form to the cleaned information and the final model, along with relevant notes, comments, illustrations, and the programming code. This way, your client will be able to understand the technical work you did while developing the model. If anybody needs to go back and verify the analysis, the guidelines and manual you provided will make it easier for them.

Conclusion

A data science project demands a great deal of transparency and collaboration. No matter how much expertise you have, you cannot build an accurate model without knowing the crux of the business process. In the same way, business owners, CEOs, managers, and sales executives will struggle to succeed in a highly dynamic market without data science. Refrain from working in isolation. Communicate with all the stakeholders involved and don’t hesitate to revisit any previous stage if necessary. Use authentic, information-rich datasets; otherwise, the results will remain unreliable while the cost in time and money keeps growing.